dissect.csail.mit.edu

Preview meta tags from the dissect.csail.mit.edu website.

Linked Hostnames (15)

- 4 links to dissect.csail.mit.edu

- 3 links to people.csail.mit.edu

- 2 links to colab.research.google.com

- 1 link to accessibility.mit.edu

- 1 link to arxiv.org

- 1 link to bzhou.ie.cuhk.edu.hk

- 1 link to doi.org

- 1 link to gandissect.csail.mit.edu

Thumbnail

Search Engine Appearance

Understanding the Role of Individual Units in a Deep Network

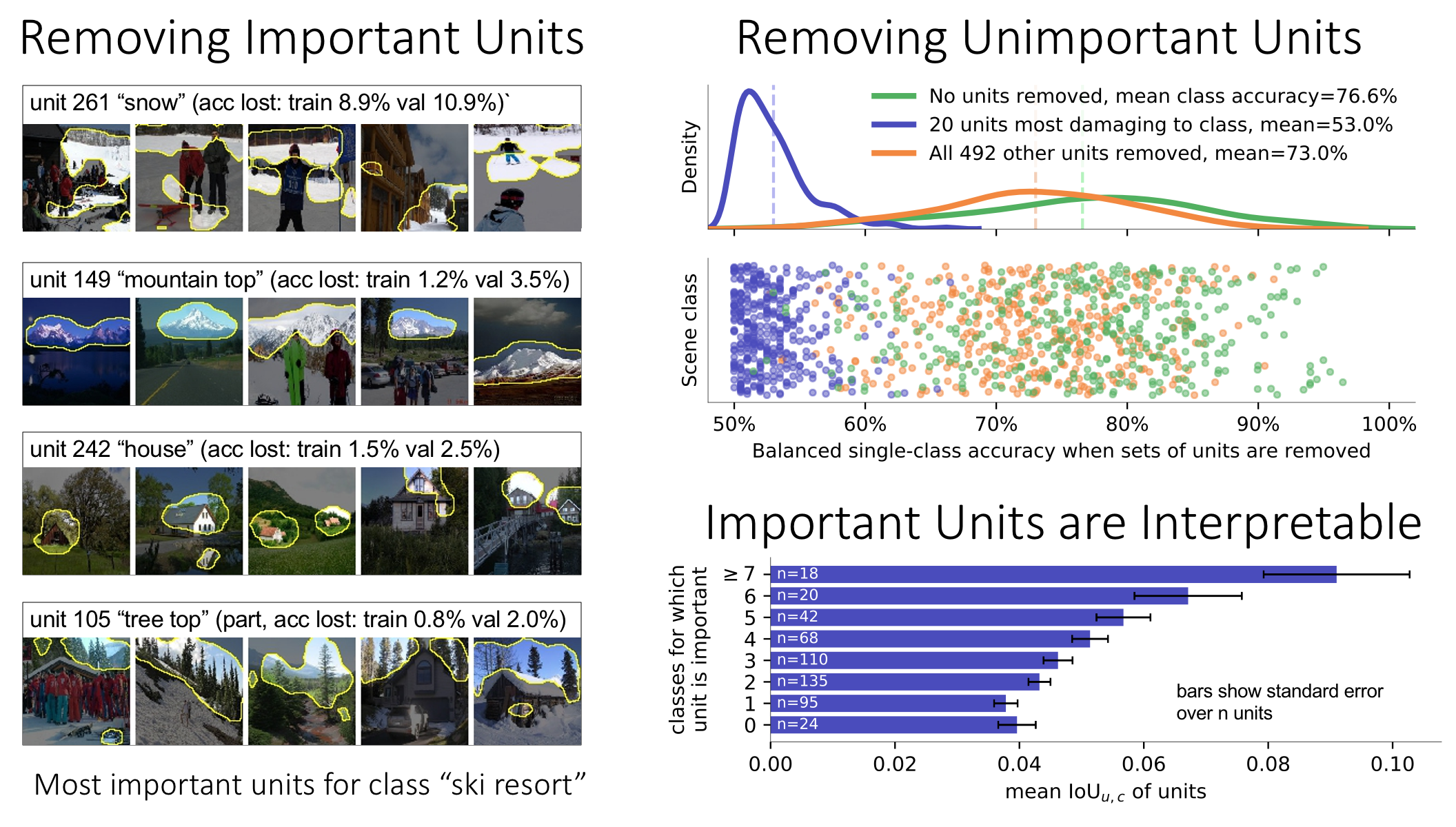

Deep neural networks excel at finding hierarchical representations that solve complex tasks over large data sets. How can we humans understand these learned representations? In this work, we present network dissection, an analytic framework to systematically identify the semantics of individual hidden units within image classification and image generation networks. First, we analyze a convolutional neural network (CNN) trained on scene classification and discover units that match a diverse set of object concepts. We find evidence that the network has learned many object classes that play crucial roles in classifying scene classes. Second, we use a similar analytic method to analyze a generative adversarial network (GAN) model trained to generate scenes. By analyzing changes made when small sets of units are activated or deactivated, we find that objects can be added and removed from the output scenes while adapting to the context. Finally, we apply our analytic framework to understanding adversarial attacks and to semantic image editing.

General Meta Tags (5)

- title: Understanding the Role of Individual Units in a Deep Network

- viewport: width=device-width,initial-scale=1

- description: Deep neural networks excel at finding hierarchical representations that solve complex tasks over large data sets. How can we humans understand these learned representations? In this work, we present network dissection, an analytic framework to systematically identify the semantics of individual hidden units within image classification and image generation networks. First, we analyze a convolutional neural network (CNN) trained on scene classification and discover units that match a diverse set of object concepts. We find evidence that the network has learned many object classes that play crucial roles in classifying scene classes. Second, we use a similar analytic method to analyze a generative adversarial network (GAN) model trained to generate scenes. By analyzing changes made when small sets of units are activated or deactivated, we find that objects can be added and removed from the output scenes while adapting to the context. Finally, we apply our analytic framework to understanding adversarial attacks and to semantic image editing.

- twitter:description: Proceedings of the National Academy of Sciences, Sep 2020. Removing units from deep networks reveals the structure of generators and classifiers.

- twitter:image: https://dissect.csail.mit.edu/image/social_image.png

Open Graph Meta Tags (4)

- og:title: Understanding the Role of Individual Units in a Deep Network

- og:url: https://dissect.csail.mit.edu/

- og:image: https://dissect.csail.mit.edu/image/social_image.png

- og:description: Proceedings of the National Academy of Sciences, Sep 2020. Removing units from deep networks reveals the structure of generators and classifiers.

Twitter Meta Tags (2)

- twitter:card: summary

- twitter:title: Understanding the Role of Individual Units in a Deep Network
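
A listing like the general, Open Graph, and Twitter groups above could be collected from a page's HTML roughly as follows. This is a minimal sketch using only Python's standard `html.parser`. The class name `MetaTagCollector`, the grouping by `og:` and `twitter:` prefix, and the sample markup are all assumptions for illustration. They are not the method used to produce this report, and the report's own grouping differs (it lists some `twitter:` tags under the general group).

```python
from html.parser import HTMLParser


class MetaTagCollector(HTMLParser):
    """Hypothetical helper: groups <title>, <meta>, and <link> tags."""

    def __init__(self):
        super().__init__()
        self.general = {}     # <title> and meta tags without a known prefix
        self.open_graph = {}  # meta tags whose key starts with "og:"
        self.twitter = {}     # meta tags whose key starts with "twitter:"
        self.links = []       # (rel, href) pairs from <link> tags
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta":
            # Pages may use either property="..." or name="..." for the key.
            key = attrs.get("property") or attrs.get("name")
            value = attrs.get("content")
            if key is None or value is None:
                return
            if key.startswith("og:"):
                self.open_graph[key] = value
            elif key.startswith("twitter:"):
                self.twitter[key] = value
            else:
                self.general[key] = value
        elif tag == "link" and "rel" in attrs and "href" in attrs:
            self.links.append((attrs["rel"], attrs["href"]))

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        # Title text can arrive in several chunks, so accumulate it.
        if self._in_title:
            self.general["title"] = self.general.get("title", "") + data


# Assumed sample markup; the real page's attributes may differ.
sample = """
<html><head>
<title>Understanding the Role of Individual Units in a Deep Network</title>
<meta name="viewport" content="width=device-width,initial-scale=1">
<meta property="og:url" content="https://dissect.csail.mit.edu/">
<meta name="twitter:card" content="summary">
<link rel="stylesheet" href="/style.css">
</head></html>
"""

collector = MetaTagCollector()
collector.feed(sample)
for group in (collector.general, collector.open_graph, collector.twitter):
    for key, value in group.items():
        print(f"- {key}: {value}")
```

Grouping by key prefix keeps the sketch short. A tool that reproduces this report's grouping exactly would need its own rules, which the report does not state.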

Link Tags (3)

- stylesheet: https://maxcdn.bootstrapcdn.com/bootstrap/4.0.0-alpha.6/css/bootstrap.min.css

- stylesheet: https://fonts.googleapis.com/css?family=Open+Sans:300,400,700

- stylesheet: /style.css

Links (21)

- http://bzhou.ie.cuhk.edu.hk

- http://dissect.csail.mit.edu/datasets

- http://dissect.csail.mit.edu/models

- http://dissect.csail.mit.edu/models/segmodel

- http://gandissect.res.ibm.com/ganpaint.html?project=churchoutdoor&layer=layer4