blog.vllm.ai/2024/07/23/llama31.html

Preview meta tags from the blog.vllm.ai website.

Linked Hostnames (10)

- 3 links to blog.vllm.ai

- 3 links to docs.vllm.ai

- 2 links to www.linkedin.com

- 1 link to centml.ai

- 1 link to github.com

- 1 link to lambdalabs.com

- 1 link to neuralmagic.com

- 1 link to www.anyscale.com

Thumbnail

Search Engine Appearance

Announcing Llama 3.1 Support in vLLM

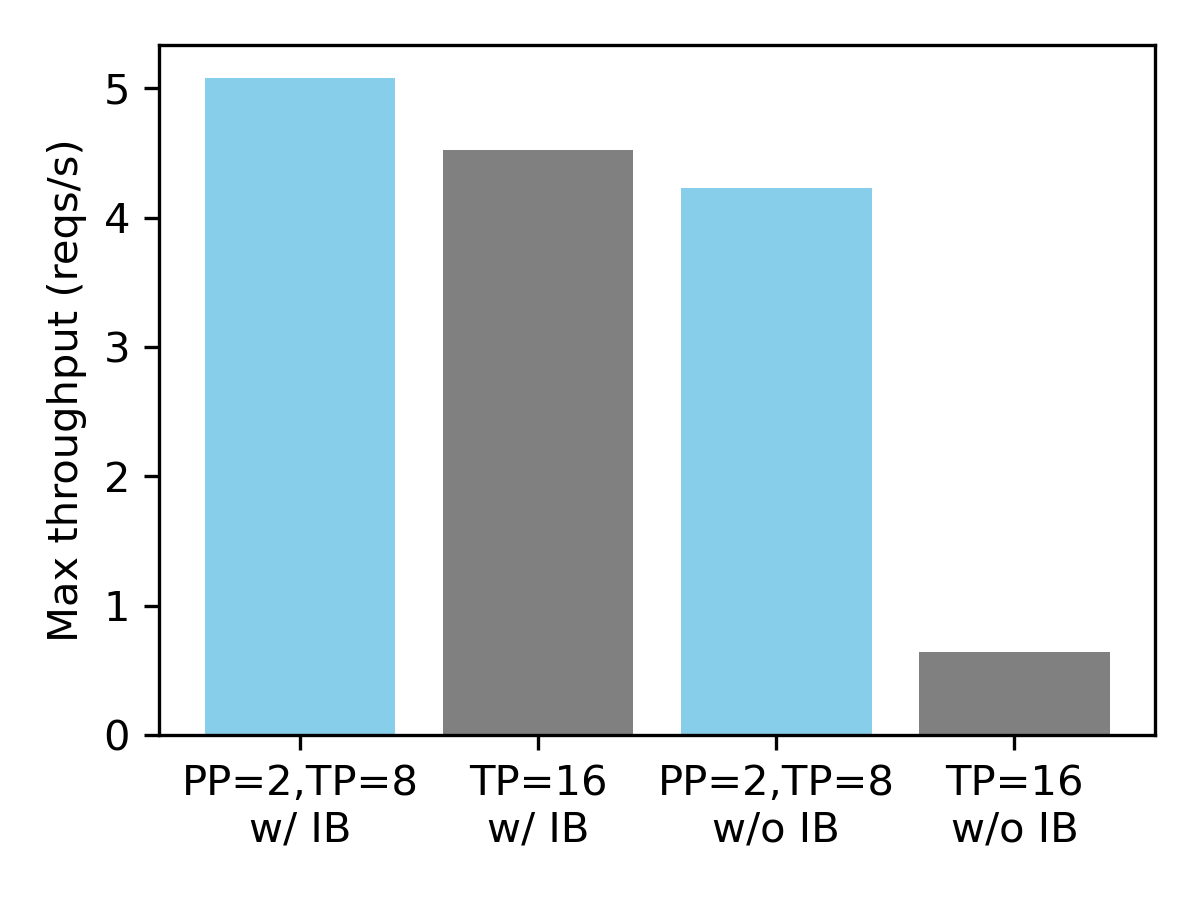

Today, the vLLM team is excited to partner with Meta to announce the support for the Llama 3.1 model series. Llama 3.1 comes with exciting new features with longer context length (up to 128K tokens), larger model size (up to 405B parameters), and more advanced model capabilities. The vLLM community has added many enhancements to make sure the longer, larger Llamas run smoothly on vLLM, which includes chunked prefill, FP8 quantization, and pipeline parallelism. We will introduce these new enhancements in this blogpost.


General Meta Tags (10)

- title: Announcing Llama 3.1 Support in vLLM | vLLM Blog

- charset: utf-8

- X-UA-Compatible: IE=edge

- viewport: width=device-width, initial-scale=1

- generator: Jekyll v3.10.0

Open Graph Meta Tags (7)

- og:title: Announcing Llama 3.1 Support in vLLM

- og:locale: en_US

- og:description: Today, the vLLM team is excited to partner with Meta to announce the support for the Llama 3.1 model series. Llama 3.1 comes with exciting new features with longer context length (up to 128K tokens), larger model size (up to 405B parameters), and more advanced model capabilities. The vLLM community has added many enhancements to make sure the longer, larger Llamas run smoothly on vLLM, which includes chunked prefill, FP8 quantization, and pipeline parallelism. We will introduce these new enhancements in this blogpost.

- og:url: https://blog.vllm.ai/2024/07/23/llama31.html

- og:site_name: vLLM Blog

Twitter Meta Tags (1)

- twitter:card: summary_large_image

Link Tags (4)

- alternate: https://blog.vllm.ai/feed.xml

- canonical: https://blog.vllm.ai/2024/07/23/llama31.html

- stylesheet: https://cdn.jsdelivr.net/npm/@fortawesome/fontawesome-free@latest/css/all.min.css

- stylesheet: /assets/css/style.css

Links (15)

- https://blog.vllm.ai

- https://blog.vllm.ai/2024/07/23/llama31.html

- https://blog.vllm.ai/feed.xml

- https://centml.ai

- https://docs.vllm.ai